ResNet

ResNet is one of the most popular architectures for image classification; the original paper has over 20,000 citations. Its authors were able to build very deep, powerful networks without running into the problem of vanishing gradients.

It is also often used as a backbone network for detection and segmentation models.

ResNet is often written together with a number (e.g., ResNet18, ResNet50, ...). The number gives the depth of the network, i.e., how many weighted layers (convolutional and fully connected) it contains.
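As a rough check of what the number counts: it includes the convolutional and fully connected layers, but not pooling layers or the 1×1 projection convolutions used on some shortcuts. The sketch below recovers the names from the stage layouts in the original ResNet paper (two-layer "basic" blocks for ResNet18/34, three-layer "bottleneck" blocks for ResNet50 and deeper). `RESNET_CONFIGS` and `weighted_layer_count` are hypothetical helpers for illustration, not part of any library.

```python
# Blocks per stage for the standard ResNet variants (layout from the original paper).
# "basic" blocks contain 2 conv layers, "bottleneck" blocks contain 3.
RESNET_CONFIGS = {
    18: ("basic", [2, 2, 2, 2]),
    34: ("basic", [3, 4, 6, 3]),
    50: ("bottleneck", [3, 4, 6, 3]),
    101: ("bottleneck", [3, 4, 23, 3]),
    152: ("bottleneck", [3, 8, 36, 3]),
}

LAYERS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def weighted_layer_count(block_type: str, blocks_per_stage: list[int]) -> int:
    """Count conv + fully connected layers, ignoring 1x1 projection shortcuts."""
    stem_conv = 1   # initial 7x7 convolution
    classifier = 1  # final fully connected layer
    body = LAYERS_PER_BLOCK[block_type] * sum(blocks_per_stage)
    return stem_conv + body + classifier


for depth, (block_type, stages) in RESNET_CONFIGS.items():
    print(f"ResNet{depth}: {weighted_layer_count(block_type, stages)} layers")
```

For example, ResNet50 has 3 + 4 + 6 + 3 = 16 bottleneck blocks, so 1 + 16 × 3 + 1 = 50 weighted layers.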

Intuition

One might assume that adding more layers to a deep neural network would always improve its performance, since the extra layers could learn more features.

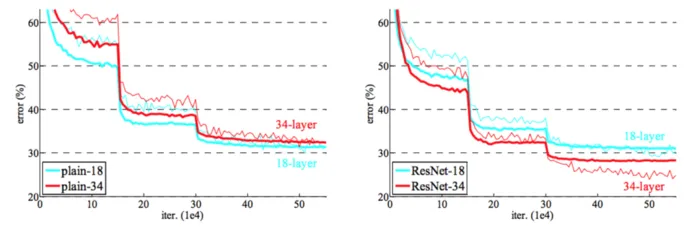

However, the authors of the original ResNet paper showed that this is not the case: beyond a certain depth, simply adding more layers to a plain network actually lowers its accuracy. Because the training error rises as well, this "degradation problem" is not caused by overfitting; the deeper network is simply harder to optimize.

Residual Blocks

As a solution, the authors proposed residual blocks, which add an “identity shortcut connection” that skips one or more layers, as shown in the following figure:

The skip connection changes how the block's output is computed. Without it, the input x is multiplied by the layer's weights w, a bias term b is added, and the result goes through the activation function f(), giving the output H(x):

$$H(x) = f(wx + b)$$

With the skip connection, the input is added back to this result, so the output becomes:

$$H(x) = f(wx + b) + x$$

The weighted layers therefore only need to learn the residual $f(wx + b) = H(x) - x$, which is what gives residual blocks their name.
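To make the plain output H(x) = f(wx + b) and the residual output H(x) = f(wx + b) + x concrete, here is a minimal pure-Python sketch. It assumes a single fully connected layer with ReLU as the activation f() and vectors stored as plain lists; the function names are hypothetical. It follows the formulation used in this article; in the original paper the second activation is applied after the addition instead. The identity shortcut only works when the layer's output size matches its input size.

```python
def relu(v: list[float]) -> list[float]:
    """Activation f(): element-wise max(0, a)."""
    return [max(0.0, a) for a in v]


def affine(w: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """Compute wx + b for a weight matrix w (one row per output)."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def plain_layer(w: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """H(x) = f(wx + b): no shortcut."""
    return relu(affine(w, x, b))


def residual_layer(w: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """H(x) = f(wx + b) + x: identity shortcut adds the input back."""
    fx = relu(affine(w, x, b))
    if len(fx) != len(x):
        raise ValueError("identity shortcut needs matching input/output sizes")
    return [a + xi for a, xi in zip(fx, x)]


x = [1.0, -2.0, 3.0]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3

print(plain_layer(zero_w, x, zero_b))     # [0.0, 0.0, 0.0]
print(residual_layer(zero_w, x, zero_b))  # [1.0, -2.0, 3.0]
```

With all weights and biases at zero, the plain layer collapses to a zero vector while the residual layer returns its input unchanged. This is the intuition behind the paper's argument: an extra residual block can fall back to the identity mapping, so adding it should not make the network worse.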

The skip connections in ResNet mitigate the vanishing gradient problem in deep neural networks by providing an alternate shortcut path through which the gradient can flow.
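A toy numerical illustration of this effect, assuming a chain of 20 scalar layers F(h) = tanh(w·h) with a small weight w = 0.01 (arbitrary values chosen for illustration; no convolutions or normalization). By the chain rule, the gradient of the output with respect to the input is the product of the per-layer derivatives. A plain layer contributes F'(h), while a residual layer h + F(h) contributes 1 + F'(h), so the shortcut keeps each factor close to 1. `input_gradient` is a hypothetical helper.

```python
import math


def input_gradient(num_layers: int, w: float, residual: bool, x0: float = 1.0) -> float:
    """Return d(output)/d(input) for a chain of scalar layers F(h) = tanh(w * h).

    Plain layer:    h_next = F(h),      local derivative F'(h)
    Residual layer: h_next = h + F(h),  local derivative 1 + F'(h)
    """
    h, grad = x0, 1.0
    for _ in range(num_layers):
        local = w * (1.0 - math.tanh(w * h) ** 2)  # F'(h), derivative of tanh(w*h)
        if residual:
            local += 1.0  # the identity shortcut contributes a derivative of 1
            h = h + math.tanh(w * h)
        else:
            h = math.tanh(w * h)
        grad *= local  # chain rule: multiply the local derivatives
    return grad


plain = input_gradient(num_layers=20, w=0.01, residual=False)
res = input_gradient(num_layers=20, w=0.01, residual=True)
print(f"plain:    {plain:.2e}")  # vanishes, on the order of 1e-40
print(f"residual: {res:.2f}")    # stays near 1 (about 1.22)
```

In this toy the residual product stays near 1 only because F'(h) is small; real ResNets also rely on careful initialization and batch normalization to keep training stable.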

With residual connections, networks with more layers perform significantly better than their plain counterparts instead of degrading, as shown above.

Parameters

Depth of the ResNet model

The number of layers in the ResNet model. In Model Playground, it can be chosen from:

Weights

The weights used to initialize the model. In Model Playground, ResNet18 ImageNet weights are used.