Recall score

If you have ever tried solving a Classification task using a Machine Learning (ML) algorithm, you might have heard of the well-known Recall score metric. On this page, we will:

- Cover the logic behind the metric (both for the binary and multiclass cases);

- Check out the metric’s formula;

- Find out how to interpret the Recall value;

- Calculate Recall on simple examples;

- Dive a bit deeper into the Micro and Macro Recall scores;

- And see how to work with the Recall score using Python.

Let’s jump in.

What is Recall in Machine Learning?

Because the Accuracy score has two major drawbacks (it is misleading on imbalanced data, and it is uninformative as a standalone Machine Learning metric), Data Scientists rely on two other metrics that address these disadvantages and give researchers a better view of a model’s performance.

Today, these metrics are widely used across the industry to evaluate Classification algorithms. They are called:

- Precision;

- And Recall.

To define the term, in Machine Learning, the Recall score (or just Recall) is a Classification metric equal to the ratio of samples that are Positive by ground truth and also predicted as Positive to the total number of samples that are Positive by ground truth. In other words, Recall measures a classifier’s ability to detect Positive samples.

To evaluate a Classification model using the Recall score, you need to have:

- The ground truth classes;

- And the model’s predictions.

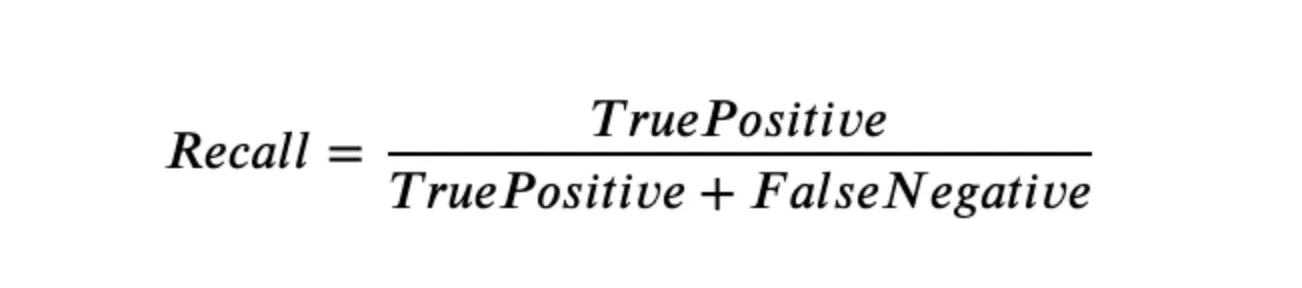

Recall score formula

The Recall score is an intuitive metric, so you should not experience any challenges in understanding it.

To get the Recall score value, you need to divide the number of True Positives (the predicted class for a sample is Positive and the ground truth class is also Positive) by the total number of Positive samples, that is, True Positives plus False Negatives (the predicted class for a sample is Negative, but the ground truth class is Positive).
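The same formula written as an equation, where TP stands for True Positives and FN for False Negatives:

```latex
\text{Recall} = \frac{TP}{TP + FN}
```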


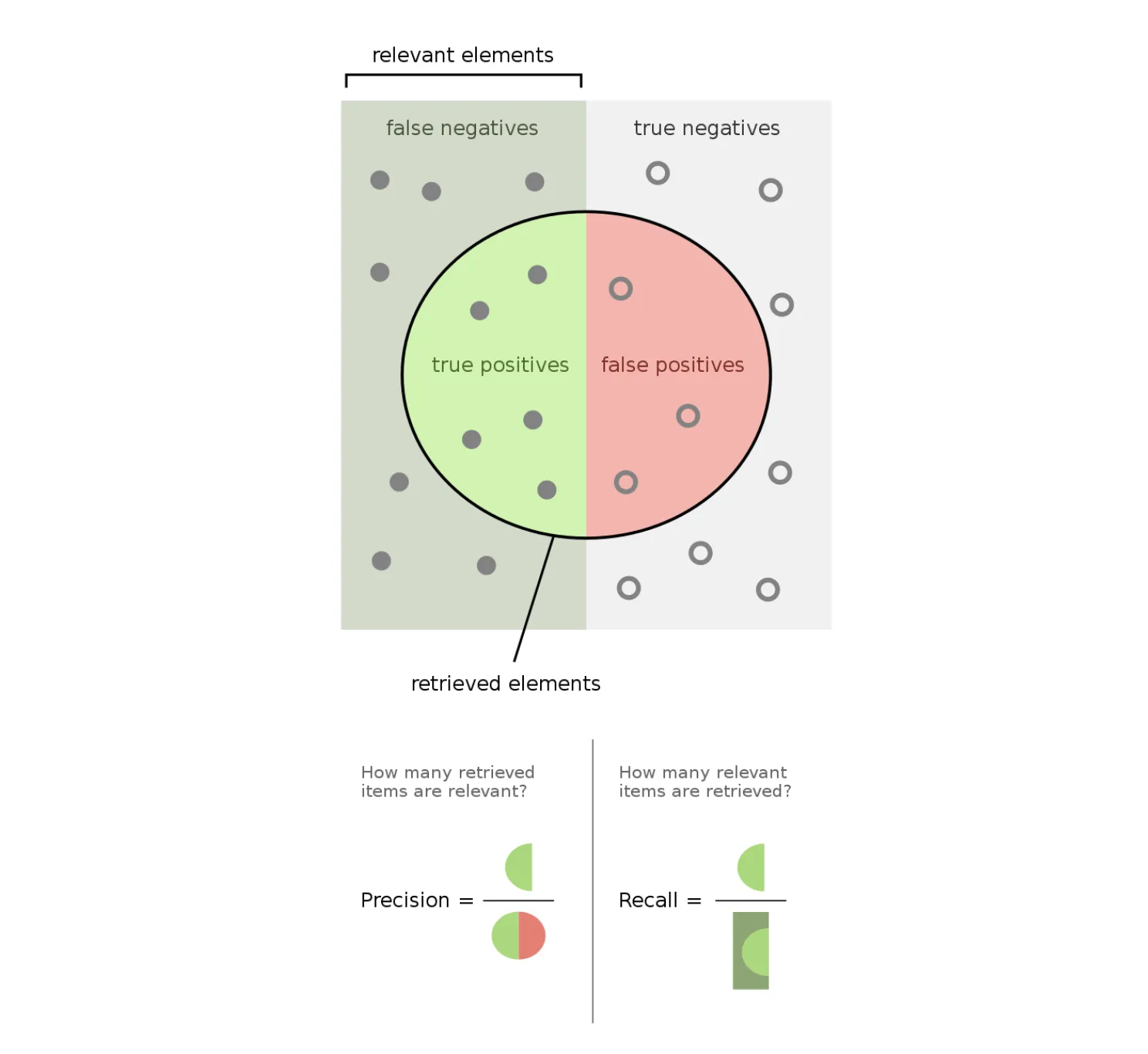

Recall is computed only from the samples that are Positive by ground truth, so it does not depend on how many Negative samples the dataset contains. This is why you can use it effectively even if your data is imbalanced. To simplify the formula, let’s visualize it.


As you can see, Recall can be easily described using Confusion matrix terms such as True Positive and False Negative. Still, as described on the Confusion matrix page, these terms are mainly used for binary Classification tasks.

So, the Recall score algorithm for the binary Classification task is as follows:

- Get predictions from your model;

- Calculate the number of True Positive and False Negative predictions;

- Apply the Recall formula;

- And analyze the obtained value.
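The steps above can be sketched in plain Python. This is a minimal illustration rather than a library implementation: the function name binary_recall and its positive parameter are hypothetical, and returning 0.0 when the ground truth contains no Positive samples at all is a convention chosen for this sketch (Recall is undefined in that case).

```python
def binary_recall(y_true, y_pred, positive=1):
    """Recall = TP / (TP + FN), with `positive` marking the Positive class."""
    pairs = list(zip(y_true, y_pred))
    # True Positives: Positive by ground truth and predicted as Positive
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    # False Negatives: Positive by ground truth but predicted as something else
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp + fn == 0:
        # No Positive samples at all: Recall is undefined, return 0.0 by convention
        return 0.0
    return tp / (tp + fn)


y_true = [1, 1, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
print(binary_recall(y_true, y_pred))  # 0.6 (TP = 3, FN = 2)
```

Note that the Negative samples (label 0) never enter the calculation, which is why changing their number leaves Recall unchanged.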

Multiclass Recall score

For the binary case, the workflow is straightforward. However, there are also multiclass use cases, and this is when things might get a bit tricky. There are various approaches to calculating Recall for a multiclass task; as the average parameter of the sklearn recall_score function shows, there are at least three options:

- Micro;

- Macro;

- And Weighted.

Each of these approaches is solid and can be very helpful in model evaluation. In real life, you will likely calculate the metric value using all of them to get a more comprehensive view of the problem. Please check out the Micro and Macro Recall score calculation examples below or the scikit-learn documentation page if you want to learn more.

So, the Recall score algorithm for the multiclass Classification task is as follows:

- Get predictions from your model;

- Choose the multiclass averaging approach that best fits your task;

- Use a Machine Learning library (for example, sklearn) to do the calculations for you;

- And analyze the obtained value while keeping in mind the approach you used to get it.
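To see what the three averaging options compute, here is a minimal pure-Python sketch of Micro, Macro, and Weighted Recall for single-label multiclass data. The function name multiclass_recall is hypothetical, and the sketch only considers classes that appear in the ground truth, which is a simplification (scikit-learn also takes labels that appear only in the predictions into account).

```python
from collections import Counter


def multiclass_recall(y_true, y_pred, average="macro"):
    """Micro, Macro, or Weighted Recall for single-label multiclass data."""
    labels = sorted(set(y_true))  # classes present in the ground truth
    support = Counter(y_true)  # TP + FN for each class
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # TP per class
    per_class = {c: tp[c] / support[c] for c in labels}

    if average == "micro":
        # Pool TP and (TP + FN) over all classes before dividing
        return sum(tp[c] for c in labels) / sum(support[c] for c in labels)
    if average == "macro":
        # Unweighted mean: every class counts the same
        return sum(per_class.values()) / len(labels)
    if average == "weighted":
        # Mean weighted by the number of ground-truth samples per class
        return sum(per_class[c] * support[c] for c in labels) / len(y_true)
    raise ValueError(f"unknown average: {average!r}")


y_true = ["cat", "cat", "dog", "dog", "dog", "bird"]
y_pred = ["cat", "dog", "dog", "dog", "bird", "bird"]
for avg in ("micro", "macro", "weighted"):
    print(avg, round(multiclass_recall(y_true, y_pred, avg), 3))
# micro 0.667
# macro 0.722
# weighted 0.667
```

On this toy data, the Weighted value equals the Micro one. That is not a coincidence: weighting each class’s Recall by its number of ground-truth samples sums back to the total number of True Positives, so for Recall on single-label multiclass data the two averages agree.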

Interpreting Recall score

In the Recall case, interpreting the metric value is more or less straightforward. The more Positive samples a model detects, the higher the score and the better the result. The best possible value is 1 (the model found all the Positive samples), and the worst is 0 (the model did not find any).

From our experience, for both multiclass and binary use cases, you should consider Recall > 0.85 an excellent score, Recall > 0.7 a good one, and anything lower a poor one. Still, you can set your own thresholds, as your logic and task might differ significantly from ours. Also, be careful in multiclass cases, as a model can have a high metric value on one class but a low one on another. So, always try to see the bigger picture, and do not rely on a single value averaged across the classes.
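One way to follow this advice is to look at the per-class Recall values next to the average and flag the weak classes. The sketch below is illustrative: the helper names per_class_recall and weak_classes are hypothetical, the toy labels are made up, and the 0.7 default threshold simply reuses the rule of thumb above, so adjust it to your task.

```python
def per_class_recall(y_true, y_pred):
    """Recall for every class present in the ground truth."""
    recalls = {}
    for c in sorted(set(y_true)):
        # Predictions for the samples whose ground truth is class c (TP + FN)
        preds_for_c = [p for t, p in zip(y_true, y_pred) if t == c]
        recalls[c] = sum(1 for p in preds_for_c if p == c) / len(preds_for_c)
    return recalls


def weak_classes(recalls, threshold=0.7):
    """Classes whose Recall is below the chosen threshold."""
    return [c for c, r in recalls.items() if r < threshold]


y_true = ["cat"] * 4 + ["dog"] * 4 + ["bird"] * 2
y_pred = ["cat"] * 4 + ["dog"] * 4 + ["cat", "bird"]

recalls = per_class_recall(y_true, y_pred)
print(recalls)  # {'bird': 0.5, 'cat': 1.0, 'dog': 1.0}
print(round(sum(recalls.values()) / len(recalls), 3))  # 0.833 (Macro average)
print(weak_classes(recalls))  # ['bird']
```

Here the averaged value looks excellent, while the bird class is detected only half of the time.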

Understanding whether you got a high or low Recall value is good, but what does this value mean in the grand scheme of things? Recall estimates the probability that the classifier labels a given Positive sample as Positive. So, with high Recall, you can trust the model’s ability to detect most Positive class instances.

Recall score calculation example

Let’s say we have a binary Classification task. For example, you are trying to determine whether a cat or a dog is in an image, with Cat as the Positive class. You have a model and want to evaluate its performance using Recall. You pass 15 pictures with a cat and 20 images with a dog to the model. Of the 15 cat images, the algorithm predicts 9 as dogs, and of the 20 dog images, it predicts 6 as cats. Let’s build a Confusion matrix first (you can check the detailed calculation on the Confusion matrix page).

Excellent, now let’s calculate the Recall score using the formula for the binary Classification use case (the correct predictions are in the green cells of the table, and the incorrect ones are in the red cells).

- Recall = TP / (TP + FN) = 6 / (6 + 9) = 0.4
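To double-check the arithmetic, the sketch below rebuilds the example as two label lists and treats Cat as the Positive class. Only the counts come from the example; the order of the samples in the lists is arbitrary.

```python
# Cat vs. dog example: 15 cat images followed by 20 dog images
y_true = ["cat"] * 15 + ["dog"] * 20
# 9 of the 15 cats are predicted as dogs; 6 of the 20 dogs are predicted as cats
y_pred = ["dog"] * 9 + ["cat"] * 6 + ["cat"] * 6 + ["dog"] * 14

# Cat is the Positive class
tp = sum(1 for t, p in zip(y_true, y_pred) if t == "cat" and p == "cat")
fn = sum(1 for t, p in zip(y_true, y_pred) if t == "cat" and p != "cat")
recall = tp / (tp + fn)
print(tp, fn, recall)  # 6 9 0.4
```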

Ok, great. Let’s expand the task and add another class, for example, Bird. You pass 15 pictures with a cat, 20 images with a dog, and 12 pictures with a bird to the model. The predictions are as follows:

- 15 cat images: 9 predicted as dogs, 3 as birds, and 15 - 9 - 3 = 3 as cats;

- 20 dog images: 6 predicted as cats, 4 as birds, and 20 - 6 - 4 = 10 as dogs;

- 12 bird images: 4 predicted as dogs, 2 as cats, and 12 - 4 - 2 = 6 as birds.

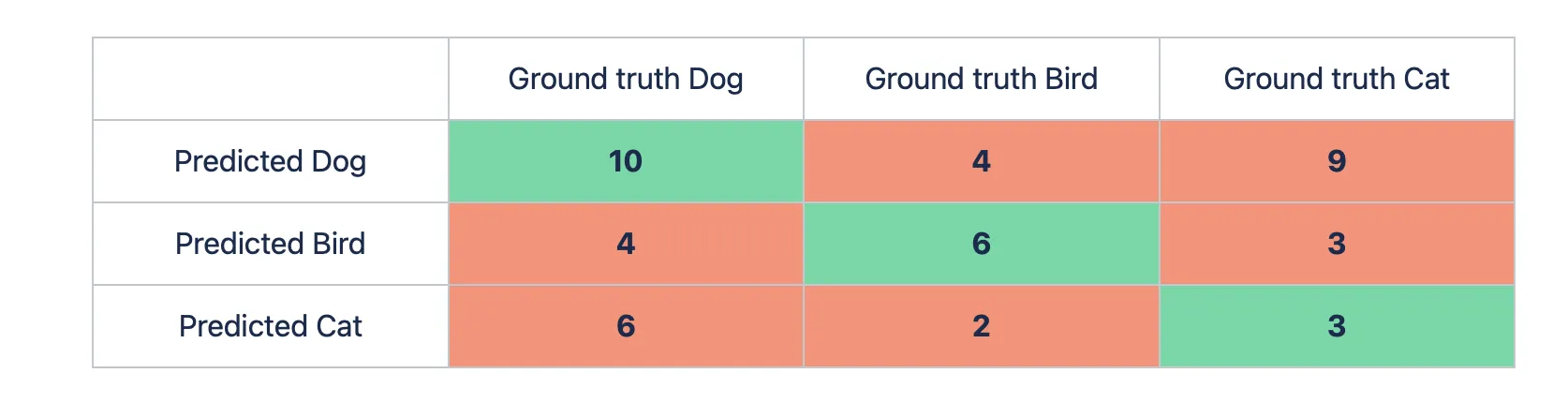

Let’s build the matrix.

Macro Recall score calculation example

The Macro Recall score is a way to study the classification as a whole. To calculate it, you compute the metric independently for each class and then take the plain average of these values. The Macro approach treats all classes equally, as it aims to see the bigger picture and evaluate the algorithm’s performance across all classes in one value.

Let’s calculate the Recall value for each class. To do so, we need to go column by column (the green cell holds the True Positive predictions for a specific class, whereas the red cells hold its False Negatives):

- Dog Recall: 10 / (4 + 6 + 10) = 0.5

- Bird Recall: 6 / (4 + 2 + 6) = 0.5

- Cat Recall: 3 / (9 + 3 + 3) = 0.2

- Macro Recall score: (Dog Recall + Bird Recall + Cat Recall) / 3 = (0.5 + 0.5 + 0.2) / 3 = 0.4
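The same Macro calculation can be reproduced from the confusion counts. In this sketch, the counts dictionary is an illustrative structure (not a library format) that maps each ground-truth class to how many of its images were predicted as each class; the numbers are the ones listed above.

```python
# Ground-truth class -> predicted class -> number of images
counts = {
    "cat": {"cat": 3, "dog": 9, "bird": 3},
    "dog": {"cat": 6, "dog": 10, "bird": 4},
    "bird": {"cat": 2, "dog": 4, "bird": 6},
}

# Per-class Recall: TP (correct predictions) / all images of that class (TP + FN)
per_class = {c: row[c] / sum(row.values()) for c, row in counts.items()}
# Macro Recall: plain (unweighted) mean of the per-class values
macro_recall = sum(per_class.values()) / len(per_class)

print(per_class)  # {'cat': 0.2, 'dog': 0.5, 'bird': 0.5}
print(round(macro_recall, 3))  # 0.4
```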

Micro Recall score calculation example

On the other hand, the Micro Recall score pools the predictions of all classes instead of averaging per-class values. To calculate it, you sum the True Positives across all classes and divide by the sum of all True Positives and False Negatives across all classes. Thus, Micro Recall combines the contributions of all classes, so every sample, rather than every class, carries equal weight.

Let’s calculate the Micro Recall score value for our use case:

- Micro Recall score: (TP Dog + TP Bird + TP Cat) / ((TP + FN) Dog + (TP + FN) Bird + (TP + FN) Cat) = (10 + 6 + 3) / ((4 + 6 + 10) + (4 + 2 + 6) + (9 + 3 + 3)) = 19 / 47 ~ 0.404
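Micro Recall can be computed from the same counts by pooling before dividing. As before, the counts dictionary is only an illustrative structure holding the example’s numbers (ground-truth class -> predicted class -> number of images).

```python
# Ground-truth class -> predicted class -> number of images
counts = {
    "cat": {"cat": 3, "dog": 9, "bird": 3},
    "dog": {"cat": 6, "dog": 10, "bird": 4},
    "bird": {"cat": 2, "dog": 4, "bird": 6},
}

# Sum of True Positives over all classes: 3 + 10 + 6
tp_total = sum(row[c] for c, row in counts.items())
# Sum of (TP + FN) over all classes, i.e. all ground-truth images
positives_total = sum(sum(row.values()) for row in counts.values())
micro_recall = tp_total / positives_total

print(tp_total, positives_total, round(micro_recall, 3))  # 19 47 0.404
```

Since every image belongs to exactly one ground-truth class, the denominator is simply the total number of images (47), and the numerator is the number of correct predictions. So, for single-label multiclass data, Micro Recall coincides with Accuracy.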

Recall score in Python

The Recall score is widely used in the industry, so most Machine and Deep Learning libraries have their own implementation of this metric. For this page, we prepared three code blocks that calculate Recall in Python. In detail, you can check out:

- Recall score in Scikit-learn (Sklearn);

- Recall score in TensorFlow;

- Recall score in PyTorch.

Recall score in Sklearn (Scikit-learn)

Scikit-learn is the most popular Python library for classical Machine Learning. From our experience, Sklearn is the tool you will likely use the most to calculate Recall (especially if you are working with tabular data). Fortunately, you can do it in the blink of an eye.

In Sklearn, the Recall score can be found under the recall_score function. The full path is sklearn.metrics.recall_score.
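A short usage sketch of sklearn.metrics.recall_score follows; the toy labels are made up for illustration. For binary 0/1 labels, the default average="binary" with pos_label=1 treats 1 as the Positive class. For multiclass labels, you have to pick an average value explicitly (the binary default raises an error), and average=None returns one Recall value per class.

```python
from sklearn.metrics import recall_score

# Binary case: 1 is the Positive class (pos_label=1 is the default)
y_true = [1, 1, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
print(recall_score(y_true, y_pred))  # 0.6

# Multiclass case: the `average` parameter selects the averaging strategy
y_true_mc = ["cat", "cat", "dog", "dog", "dog", "bird"]
y_pred_mc = ["cat", "dog", "dog", "dog", "bird", "bird"]
for average in ("micro", "macro", "weighted"):
    print(average, round(recall_score(y_true_mc, y_pred_mc, average=average), 3))
# micro 0.667
# macro 0.722
# weighted 0.667

# average=None: per-class Recall, in sorted label order (bird, cat, dog)
print(recall_score(y_true_mc, y_pred_mc, average=None))
```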