Lion

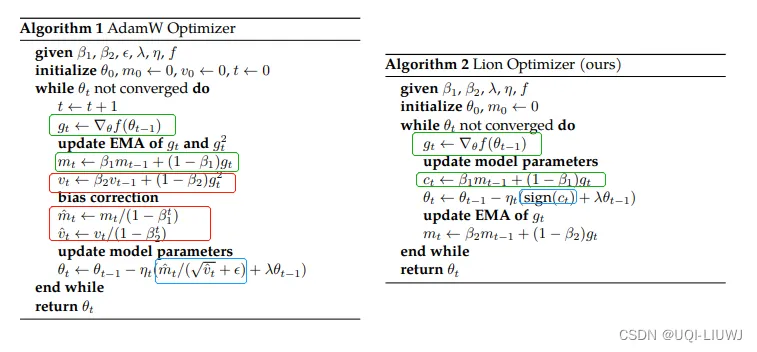

EvoLved Sign Momentum (Lion) is a Deep Learning stochastic optimization algorithm that builds upon the concept of sign momentum optimization. Lion, proposed in a research paper in 2023, aims to address the limitations of traditional optimization algorithms such as Stochastic Gradient Descent (SGD) and AdamW.

Lion is similar to AdamW with slight adjustments. Like sign momentum optimization, Lion only considers the sign of the gradient rather than the magnitude.

Lion also incorporates population-based training, where models with different hyperparameters are maintained and evolved over time. This helps to find better hyperparameters and improve the model's overall performance.

Source

In experiments conducted by the developers, Lion was shown to outperform traditional optimization algorithms such as SGD and Adam on several benchmark datasets. Lion was also found to be more robust to changes in hyperparameters and more resistant to overfitting.

Overall, Lion is a promising optimization algorithm that has shown competitive performance compared to other state-of-the-art optimization algorithms on various deep learning tasks, including image classification, object detection, and natural language processing.