Orchestrated Deployment

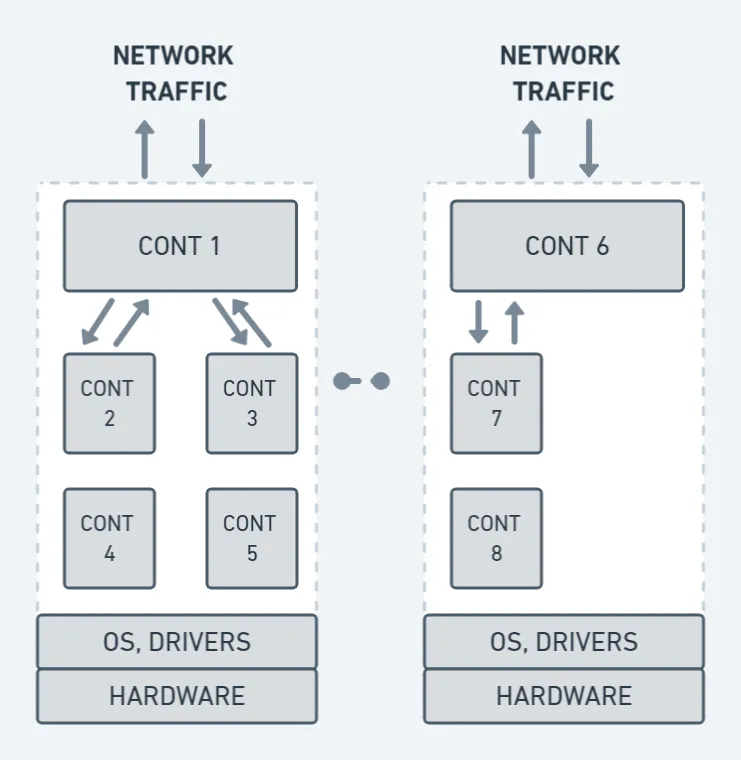

If you want to run several models with different logic at the same time, it makes sense to use a container orchestration platform such as Kubernetes. It lets you manage multiple workloads at once.

The key benefit is scaling. Take a typical model, such as a fraud detection model deployed in a banking business process. The volume of transactions coming in for scoring may be very high during the day and then drop at night. You therefore need computational resources that can handle peak loads without costing an arm and a leg when the load goes down.
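The sizing question behind this can be sketched in a few lines of Python. This is only an illustration: the function name, the per-instance capacity of 100 transactions per second, and the minimum and maximum instance counts are all assumptions, not figures from a real deployment.

```python
import math


def replicas_needed(transactions_per_second, capacity_per_replica,
                    min_replicas=1, max_replicas=20):
    """Estimate how many model instances a given load requires.

    All parameters are hypothetical; capacity_per_replica is the number of
    transactions one instance is assumed to score per second.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    # Round up so that a partially loaded instance still counts as one.
    needed = math.ceil(transactions_per_second / capacity_per_replica)
    # Clamp to the allowed range: never below the floor, never above the cap.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    print("daytime:", replicas_needed(900, 100))
    print("night:  ", replicas_needed(40, 100))
```

The clamp is the part that controls cost: the floor keeps the model available at night, and the cap bounds the bill during an unexpected spike.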

Kubernetes

Kubernetes is a container orchestration platform that can take a given container and scale it up or down with a few command-line inputs. For example, you might serve 10 instances of the banking model on a regular day and then scale down to 5 or 6 instances over the weekend.
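As a rough sketch of that weekday and weekend schedule, the Python below picks an instance count from the date and builds the matching `kubectl scale` command. The deployment name `fraud-model` and the counts are assumptions for illustration, and the script only prints the command rather than running it against a cluster.

```python
import datetime

# Hypothetical instance counts, taken from the example in the text.
WEEKDAY_REPLICAS = 10
WEEKEND_REPLICAS = 5


def replicas_for(day):
    """Return the instance count for a date (Saturday and Sunday are 5 and 6)."""
    return WEEKEND_REPLICAS if day.weekday() >= 5 else WEEKDAY_REPLICAS


def scale_command(deployment, replicas):
    """Build the kubectl argument list that sets the replica count."""
    return ["kubectl", "scale", f"deployment/{deployment}", f"--replicas={replicas}"]


if __name__ == "__main__":
    today = datetime.date.today()
    # "fraud-model" is an assumed deployment name.
    command = scale_command("fraud-model", replicas_for(today))
    print(" ".join(command))
```

In practice this kind of decision is often delegated to an autoscaler rather than a fixed calendar, but the manual command shows how little is needed to change capacity.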

Orchestration also helps with zero-downtime deployment. In many scenarios your model must operate 24 hours a day, seven days a week, and you may have a new version that you want to roll out with no downtime. By default, a Kubernetes Deployment performs a rolling update, gradually replacing old instances with new ones. Other strategies can be built on top of Kubernetes, including blue-green deployment (either blue or green serves production, you deploy the new version to the other environment, and then you switch production traffic to it) and canary deployment (the new version first receives a small share of traffic before it is rolled out fully).
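The blue-green switch can be illustrated with a small simulation. In Kubernetes, a Service sends traffic to the pods whose labels match its selector, so changing one label value in the selector moves production traffic from one environment to the other. The code below is a plain-Python model of that idea, not a call to the Kubernetes API; the pod names and the `app` and `version` labels are hypothetical.

```python
def matching_pods(pods, selector):
    """Return the names of pods whose labels satisfy every selector entry."""
    return [
        pod["name"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


def switch_to(service, version):
    """Return a copy of the service whose selector points at another version."""
    return {**service, "selector": {**service["selector"], "version": version}}


# Both environments run side by side; only the selector decides who serves.
PODS = [
    {"name": "fraud-blue-1", "labels": {"app": "fraud-model", "version": "blue"}},
    {"name": "fraud-blue-2", "labels": {"app": "fraud-model", "version": "blue"}},
    {"name": "fraud-green-1", "labels": {"app": "fraud-model", "version": "green"}},
    {"name": "fraud-green-2", "labels": {"app": "fraud-model", "version": "green"}},
]

SERVICE = {"name": "fraud-model", "selector": {"app": "fraud-model", "version": "blue"}}

if __name__ == "__main__":
    print("before:", matching_pods(PODS, SERVICE["selector"]))
    cutover = switch_to(SERVICE, "green")
    print("after: ", matching_pods(PODS, cutover["selector"]))
```

Because the old environment keeps running after the switch, rolling back is the same operation in reverse: point the selector at the previous version again.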