AI Consensus Scoring for Attributes

AI Consensus Scoring (AI CS) is one of Hasty’s key features, offering AI-powered quality assurance. Initially, AI CS supported Class, Object Detection, and Instance Segmentation reviews. You can now also use it to evaluate your Attribute annotations, which is what this page covers.

Let’s jump in.

How to schedule an AI Consensus Scoring run for Attributes?

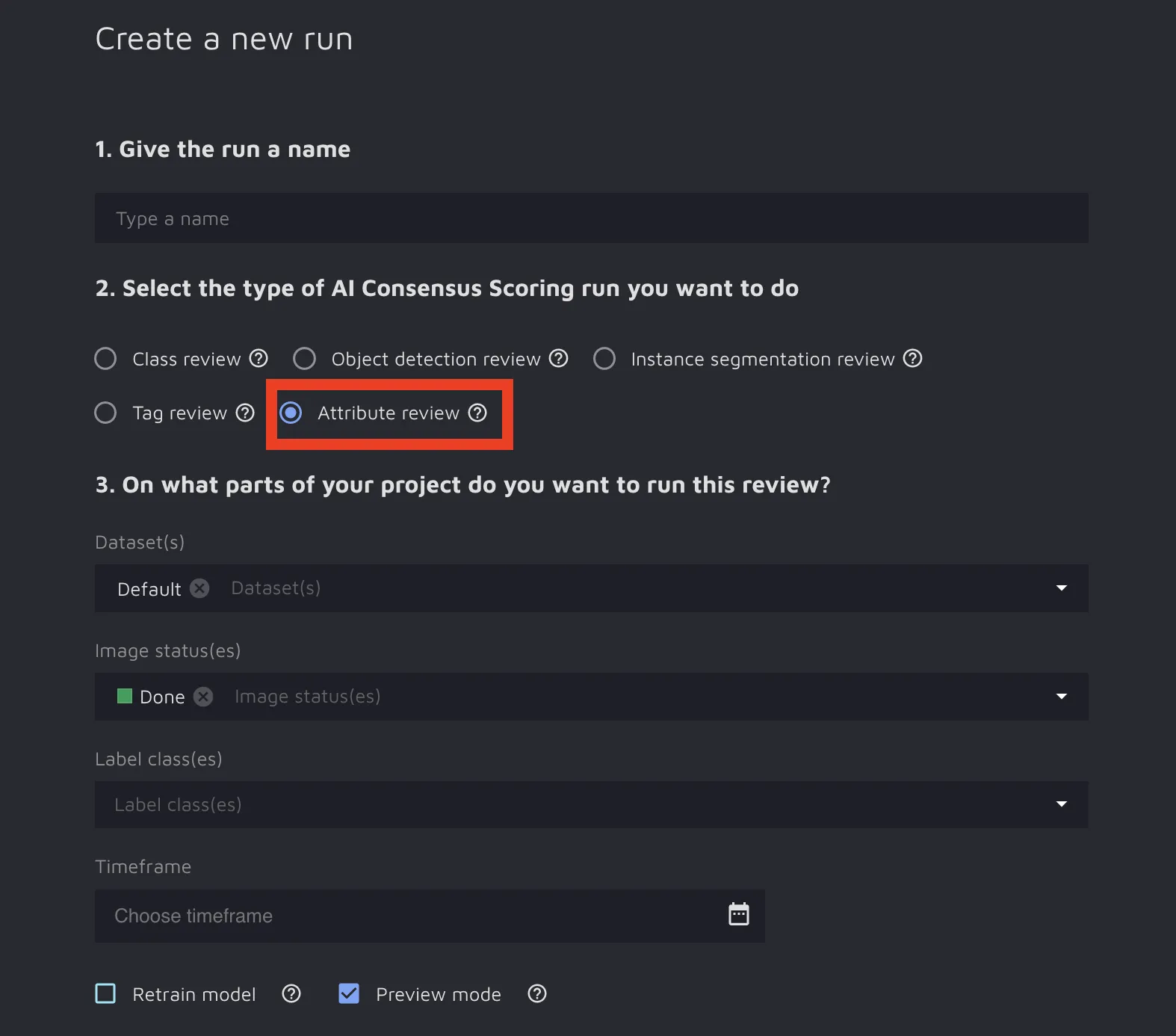

AI CS for Attributes is another run type in the AI Consensus Scoring environment. To schedule an Attribute review run, you need to:

Have Attributes in your annotations strategy and project taxonomy;

Annotate some objects using the attributes;

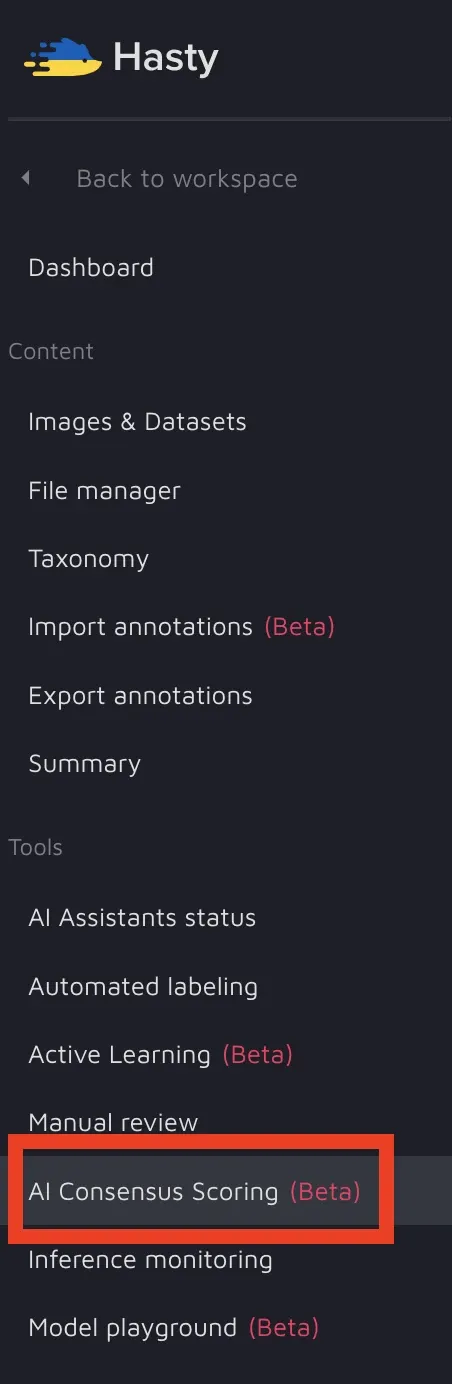

3. Navigate to the AI Consensus Scoring Environment through the project’s menu;

4. Click on the “Create new run button“ and choose “Attribute review” as the run type.

5. Once the run is complete, click on its name in the AI CS environment to see the suggestions.

How to work with AI Consensus Scoring suggestions for Attributes?

Attribute review focuses on identifying the following mistakes:

- Misclassification:
  - For a single-choice attribute group, if AI CS disagrees with the selected attribute;
  - For a boolean attribute, if AI CS disagrees with its value;
- Missing attribute:
  - For a boolean attribute that the original annotation does not include;
  - For a single-choice attribute group, if the original annotation has no choice selected;
  - For a multi-choice attribute group, if the original annotation misses some of its choices;
- Extra attribute:
  - For a multi-choice attribute group.
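To make the error types above more concrete, here is a minimal Python sketch that compares the attributes of one label with a model's prediction and labels each disagreement. The value shapes, the `Suggestion` class, and the `review_attributes` function are hypothetical illustrations, not Hasty's implementation or API; the sketch also assumes a prediction is already available for each attribute group and ignores confidence scores.

```python
from dataclasses import dataclass
from typing import Union

# Hypothetical value shapes (not Hasty's data model):
#   "single"  -> the selected choice (str), or None if nothing is selected
#   "multi"   -> a set of selected choices
#   "boolean" -> True/False, or None if the attribute was never set
Value = Union[str, bool, set, None]


@dataclass(frozen=True)
class Suggestion:
    group: str
    error_type: str  # "misclassification", "missing" or "extra"
    original: Value
    suggested: Value


def review_attributes(group_types: dict[str, str],
                      original: dict[str, Value],
                      predicted: dict[str, Value]) -> list[Suggestion]:
    """Compare one label's attributes with a (hypothetical) model
    prediction and classify every disagreement by error type."""
    suggestions = []
    for group, kind in group_types.items():
        orig = original.get(group)
        pred = predicted.get(group)
        if kind in ("single", "boolean"):
            if pred is None or orig == pred:
                continue  # no prediction for this group, or agreement
            # Nothing set originally -> missing; a different value -> misclassified.
            error = "missing" if orig is None else "misclassification"
            suggestions.append(Suggestion(group, error, orig, pred))
        elif kind == "multi":
            orig_set = set(orig or ())
            pred_set = set(pred or ())
            # Predicted choices the annotation lacks are "missing" ...
            for choice in sorted(pred_set - orig_set):
                suggestions.append(Suggestion(group, "missing", None, choice))
            # ... and annotated choices the prediction lacks are "extra".
            for choice in sorted(orig_set - pred_set):
                suggestions.append(Suggestion(group, "extra", choice, None))
        else:
            raise ValueError(f"unknown attribute group type: {kind}")
    return suggestions


if __name__ == "__main__":
    types = {"color": "single", "occluded": "boolean", "parts": "multi"}
    original = {"color": "red", "occluded": None, "parts": {"wheel", "mirror"}}
    predicted = {"color": "blue", "occluded": True, "parts": {"wheel", "door"}}
    for s in review_attributes(types, original, predicted):
        print(s.group, s.error_type, s.original, s.suggested)
    # color misclassification red blue
    # occluded missing None True
    # parts missing None door
    # parts extra mirror None
```

Note that in this sketch, "extra" can only arise for multi-choice groups, matching the list above: a single-choice or boolean group holds one value, so a disagreement there is either a misclassification or a missing value.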

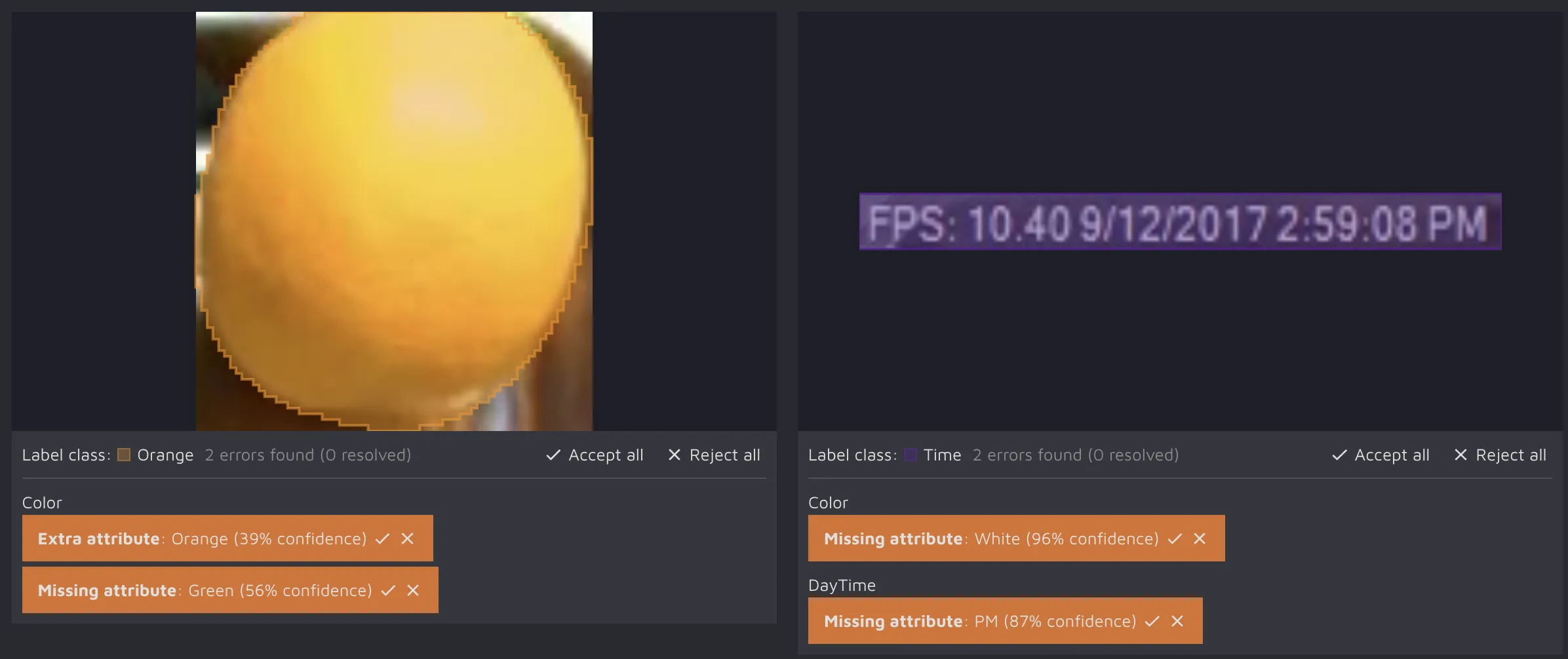

Currently, suggestions are displayed as follows:

- The thumbnail card shows the whole label with all its attributes, both original and suggested;

- You can check the confidence score for each suggestion;

- You can accept or reject all suggestions at once with the “Accept all” / “Reject all” options on the thumbnail card. After you act, pay attention to the colors: a bright attribute was either added to the image or stayed the same, while a transparent one is not on the image after your action (depending on the error type, this can follow either an accept or a reject);

- You can also accept or reject suggestions one by one on the thumbnail card.
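The color rule described above can be sketched in a few lines of Python. This is our hypothetical reading of it, not Hasty's code: we assume that accepting a suggestion applies it (the suggested value is added and the original value is dropped) and rejecting it leaves the label unchanged. The `ROLES` table and the `styles_after_action` function are illustrative names only.

```python
# Hypothetical: which attribute values each error type involves.
ROLES = {
    "misclassification": ("original", "suggested"),
    "missing": ("suggested",),
    "extra": ("original",),
}


def styles_after_action(error_type: str, accepted: bool) -> dict[str, str]:
    """Map each attribute value involved in a suggestion to the style it
    would get after the user accepts or rejects the suggestion.

    Assumed rule: a value is "bright" if it is on the image after the
    action (added or unchanged) and "transparent" if it is not.
    """
    if error_type not in ROLES:
        raise ValueError(f"unknown error type: {error_type}")
    styles = {}
    for role in ROLES[error_type]:
        # Accepting adds the suggested value and drops the original one;
        # rejecting keeps the original and never adds the suggested value.
        on_image = accepted if role == "suggested" else not accepted
        styles[role] = "bright" if on_image else "transparent"
    return styles


if __name__ == "__main__":
    print(styles_after_action("missing", accepted=False))
    # {'suggested': 'transparent'}
    print(styles_after_action("extra", accepted=True))
    # {'original': 'transparent'}
    print(styles_after_action("misclassification", accepted=True))
    # {'original': 'transparent', 'suggested': 'bright'}
```

This shows why a transparent attribute can follow either action: for a missing attribute it follows a reject, while for an extra attribute it follows an accept.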

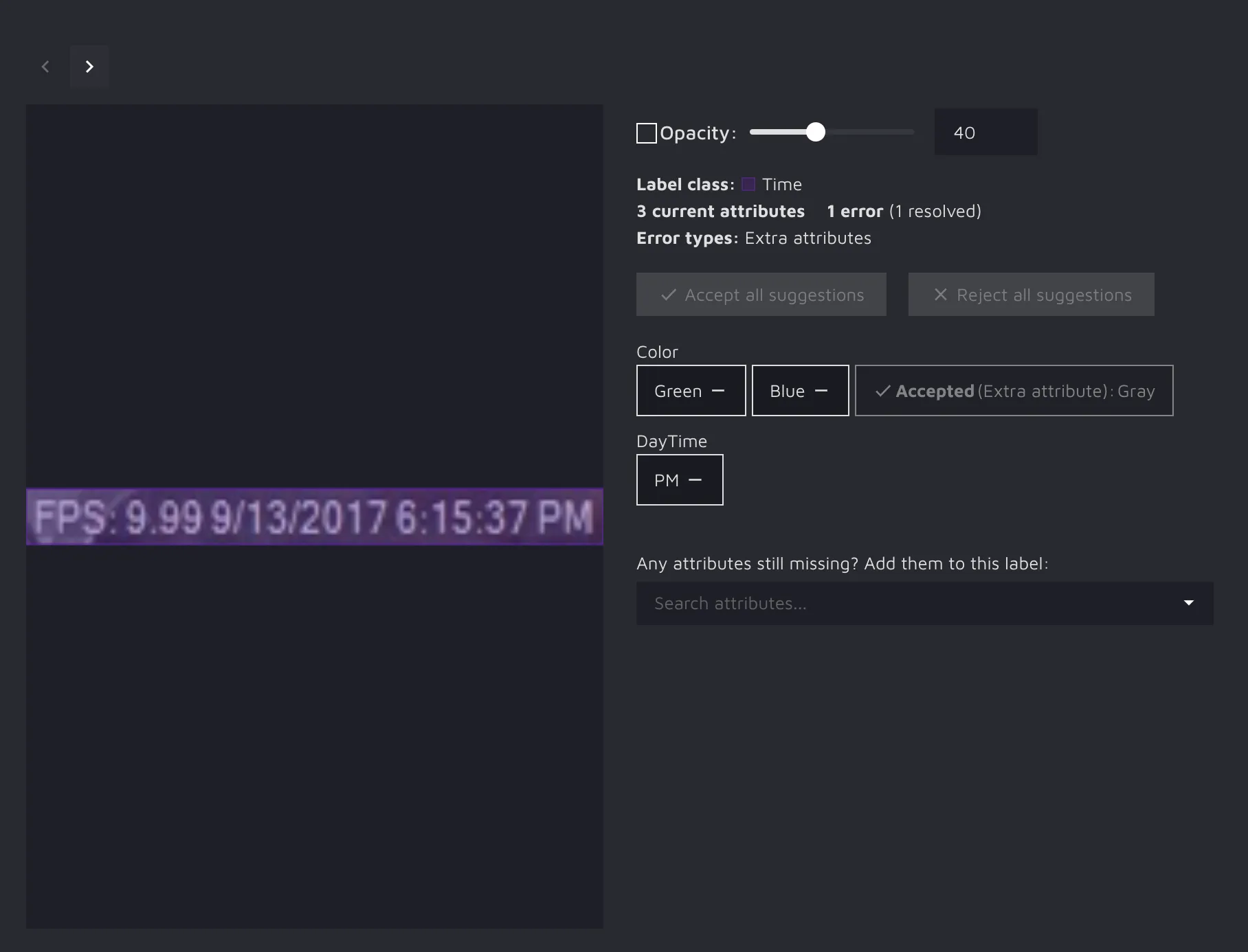

Clicking an image opens a more detailed view, where you can also add a missing attribute directly from the AI CS environment through a dropdown menu.

AI CS results for attributes are not divided by error type; instead, you see all potential attribute errors per image. If you want to filter by error type, use the filter options in the top bar.