Why look for errors when you can fix them?

Instance Segmentation

Today, nearly all quality control and assurance is manual, with up to 30% of data labeling time spent sifting through images in search of errors. The AI consensus scoring built into the Hasty platform automates the QA process: it finds potential issues, proposes a fix for each, and serves them to a data annotator, who can quickly accept or reject the suggestion.

AI consensus scoring provides the same, or higher, data quality at a lower cost.
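To make the accept-or-reject flow concrete, here is a minimal Python sketch. It is not Hasty's API: the `Suggestion` structure, the `review` function, and the confidence threshold are all hypothetical names and values chosen for illustration. It assumes each flagged annotation arrives with one proposed fix, and that a fix is applied only when the reviewer accepts it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Suggestion:
    """A flagged annotation plus a proposed fix (hypothetical structure)."""
    annotation_id: str
    current_label: str
    proposed_label: str
    confidence: float  # assumed score: how sure the model is that the label is wrong


def review(suggestions: List[Suggestion],
           labels: Dict[str, str],
           decide: Callable[[Suggestion], bool]) -> Dict[str, str]:
    """Return a copy of `labels` with only the accepted fixes applied."""
    updated = dict(labels)
    # Present the most confident suggestions first.
    for s in sorted(suggestions, key=lambda s: s.confidence, reverse=True):
        if decide(s):  # the human reviewer accepts or rejects
            updated[s.annotation_id] = s.proposed_label
    return updated


labels = {"a1": "cat", "a2": "dog", "a3": "car"}
suggestions = [
    Suggestion("a1", "cat", "dog", 0.91),
    Suggestion("a3", "car", "truck", 0.55),
]
# Stand-in for the annotator: accept only high-confidence suggestions.
result = review(suggestions, labels, decide=lambda s: s.confidence >= 0.8)
print(result)
```

Because rejected suggestions leave the original label untouched, the reviewer stays in control of every change to the dataset.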

Object Detection

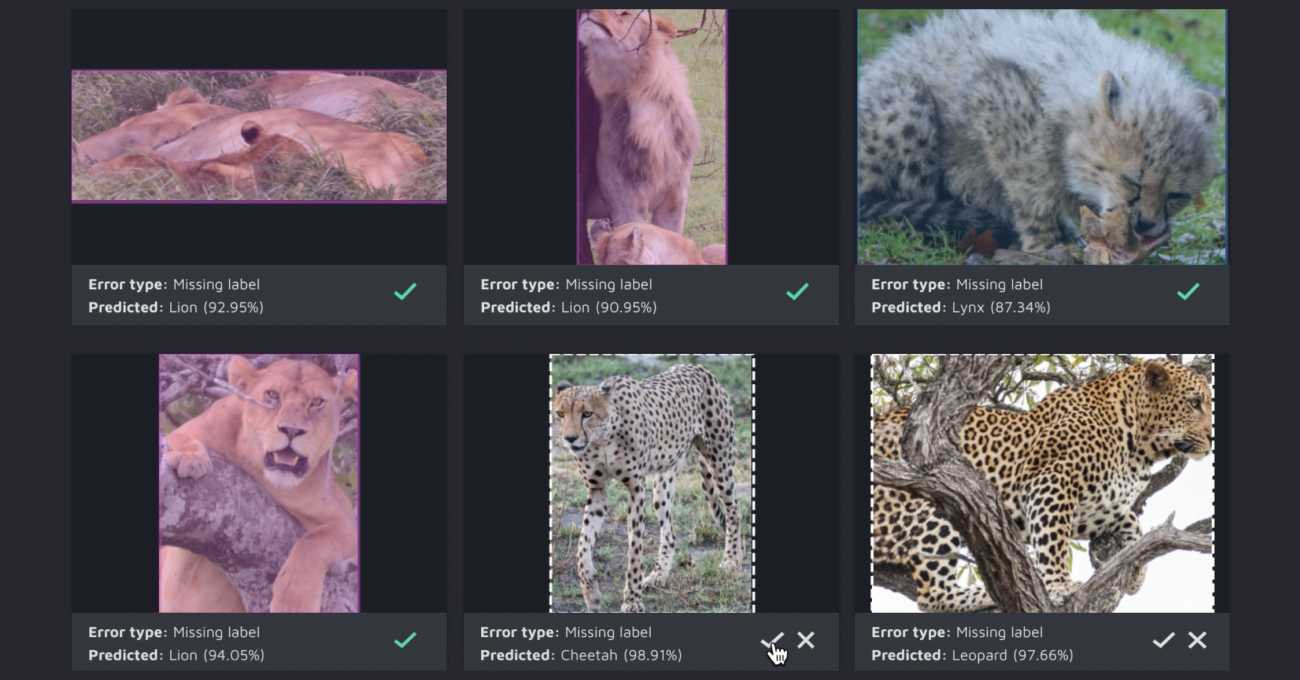

Label Classification

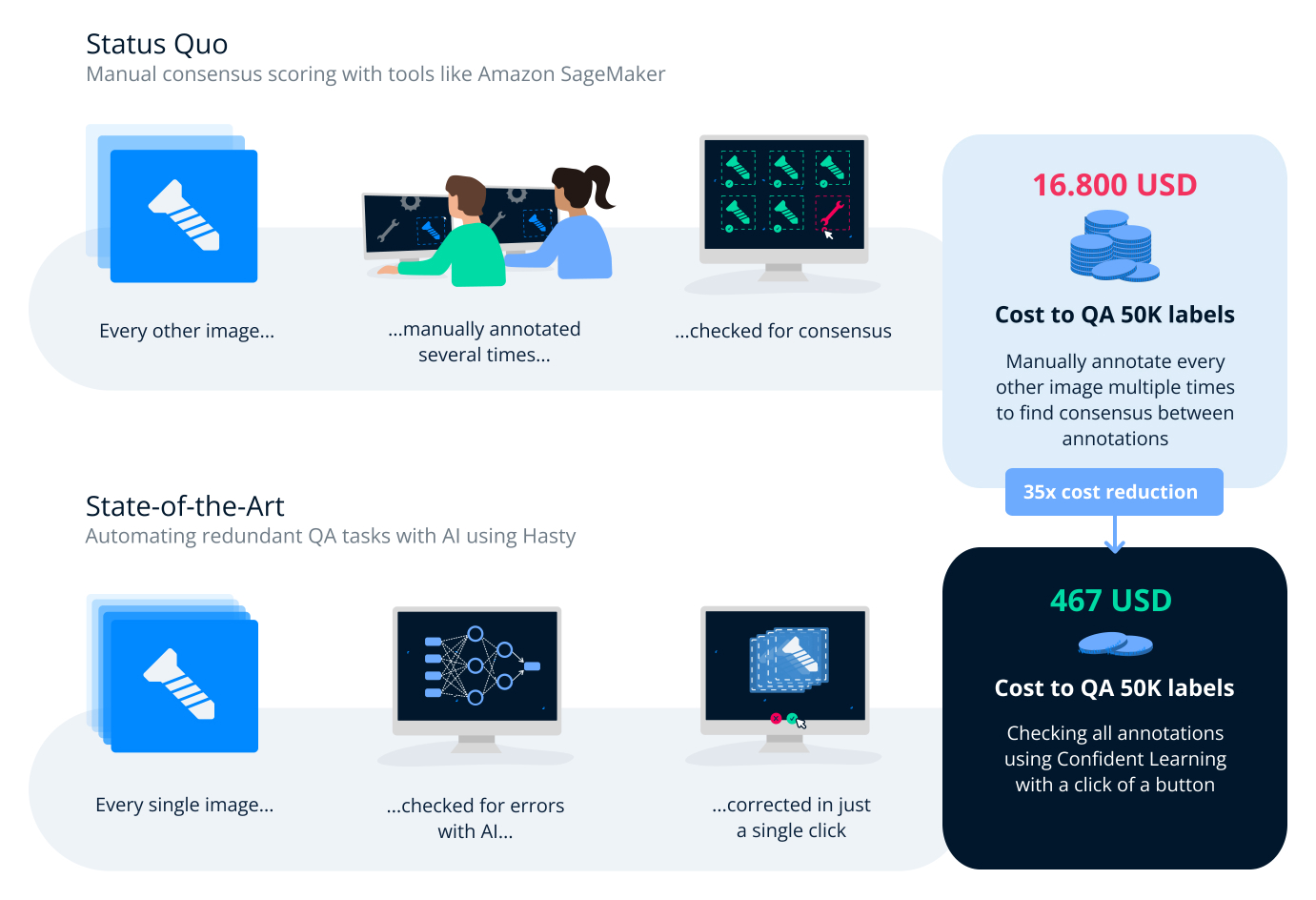

Better data for a fraction of the cost

By fixing errors instead of searching for them, your QA becomes much more productive and efficient, which drives cost savings. And because a data labeler accepts or rejects everything that is flagged as an error, you can be confident that data quality will not be negatively impacted.

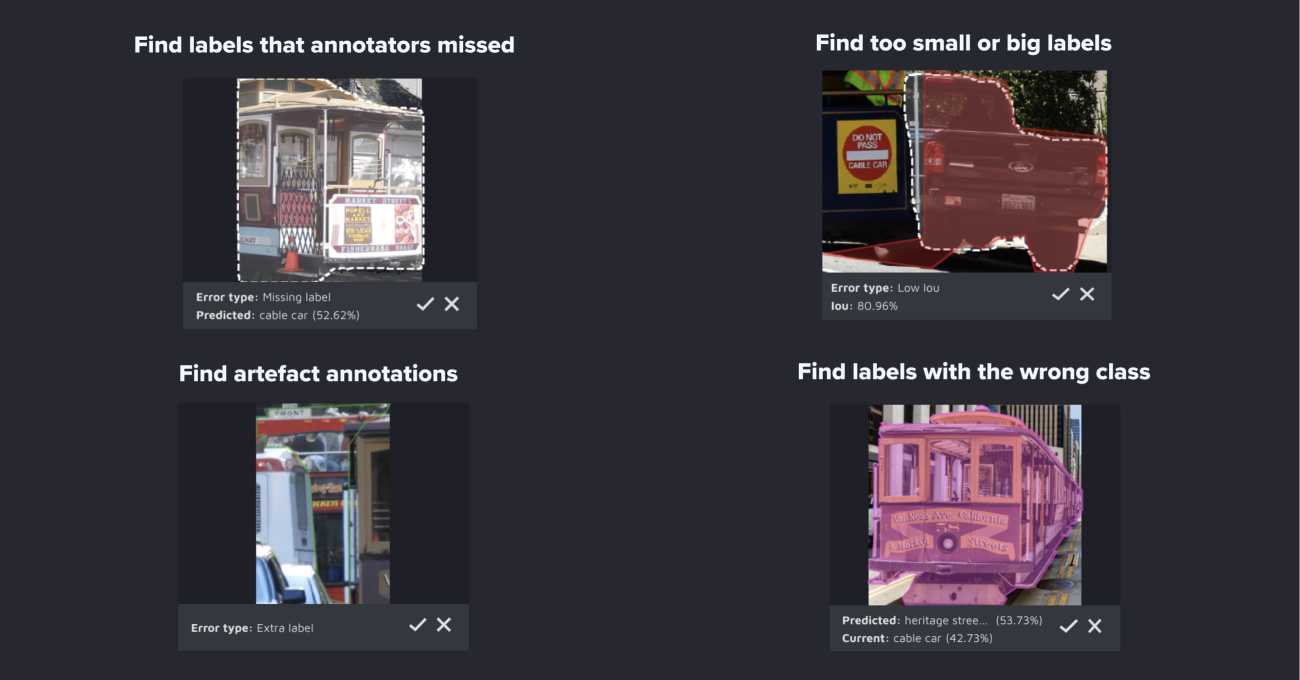

State-of-the-art AI QA for all annotation types

Regardless of the image or video data you have, or the type of annotations being performed, our AI consensus scoring quickly finds problems and enables a fix at the click of a button.

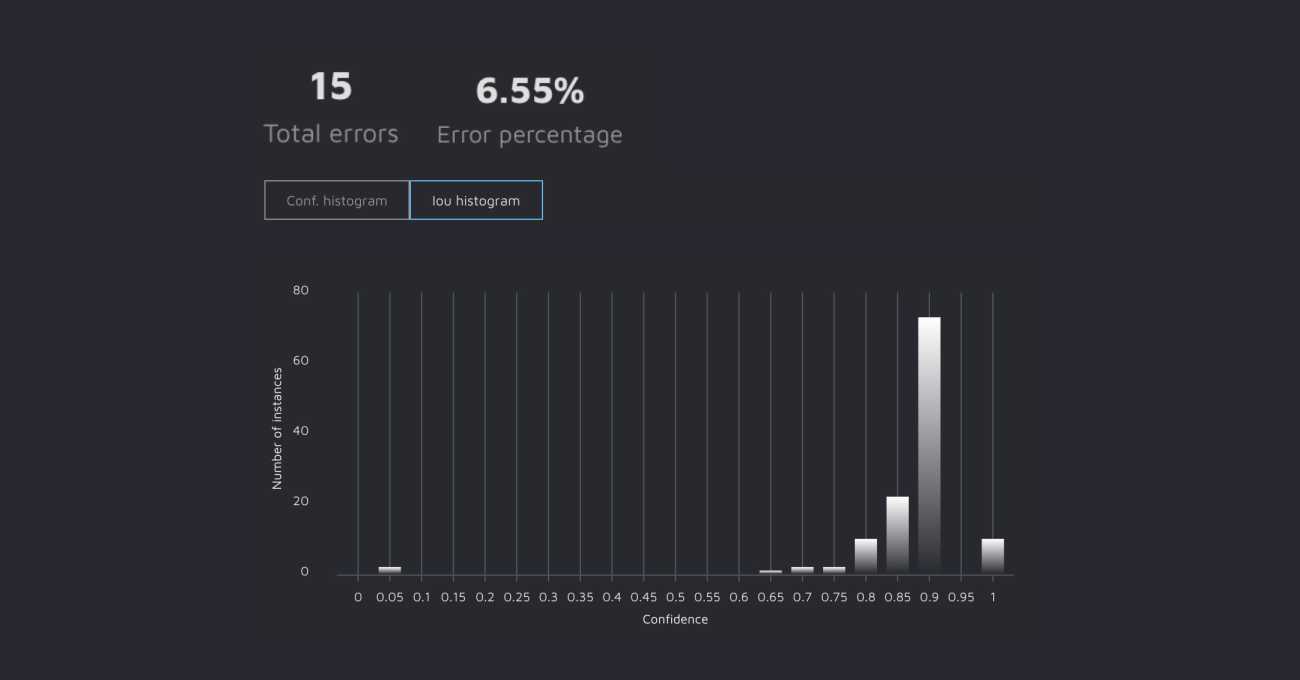

Gain valuable insight into data labeling performance

The platform reports on the number of errors found and highlights patterns in the issues the AI detects within the labeling process. This provides a holistic view of how your labeling operation is performing, so additional guidance or training can be delivered to the data labeling team.
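As a rough illustration of this kind of reporting, the Python sketch below counts confirmed errors per labeler and per error type. The log format, labeler names, and error categories are hypothetical and do not reflect the platform's actual export format. It assumes only suggestions accepted by a reviewer count as errors.

```python
from collections import Counter

# Hypothetical review log: (labeler, error_type, accepted_by_reviewer)
review_log = [
    ("alice", "wrong_class", True),
    ("alice", "loose_box", True),
    ("bob", "wrong_class", True),
    ("bob", "wrong_class", True),
    ("bob", "missing_object", False),  # rejected suggestion, not counted
]

# Keep only the suggestions a reviewer confirmed as real errors.
confirmed = [(labeler, err) for labeler, err, accepted in review_log if accepted]

errors_per_labeler = Counter(labeler for labeler, _ in confirmed)
errors_per_type = Counter(err for _, err in confirmed)

print(errors_per_labeler.most_common())
print(errors_per_type.most_common(1))
```

A summary like this can point to where extra guidance is needed, for example a class that several labelers confuse.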

Before discovering Hasty, labeling images was labor intensive, time-consuming, and less accurate. Hasty’s approach of training the model while labeling with faster annotate-test cycles has saved Audere countless hours.

Jenny Abrahamson

Software Engineer at Audere

Hasty.ai helped us improve our ML workflow by 40%, which is fantastic. It reduced our overall investment by 90% to get high-quality annotations and an initial model.

Dr. Alexander Roth

Head of Engineering at Bayer Crop Sciences

Because of Hasty, PathSpot has been able to accelerate development of key features. Open communication and clear dialog with the team has allowed our engineers to focus. The rapid iteration and strong feedback loop mirrors our culture of a fast-moving technology company.

Alex Barteau

Senior Computer Engineer at PathSpot Technologies

Talk to Sales

Our team is happy to offer advice and answer questions about machine learning, AI, data labeling and data processing.